What is Multi-Agent Orchestration?

Previously, we’ve defined AI orchestration as “the automation and coordination of multiple AI models, agents, and systems to create complex, efficient, and scalable systems”. We broke this definition down into seven key tenets: (i) integrations, (ii) permissions, (iii) agency, (iv) memory, (v) human-in-the-loop, (vi) observability, and (vii) scalability.

For today’s discussion, we’ll focus on the last tenet and unpack how multi-agent orchestration operates, as well as how it can be aided by third-party solutions such as Credal.

What is a multi-agent system?

A multi-agent system brings together multiple AI agents to collaborate on one overarching goal. This is a newer approach within AI computing, as what most people call “agentic workflows” today tends to refer to siloed, single-agent implementations.

Why are single AI agents not enough?

While agents are designed to operate autonomously towards a task by observing context, forming decisions, and executing the necessary actions, there are still clear constraints to this method. In complex enterprise environments, the overwhelming abundance of context can confuse an agent instead of helping it.

Consider what would happen if a single employee were expected to master internal policies, data infrastructure, Salesforce records, engineering issue tracking, product data, and every single document in the Google Drive. They would never be able to get any proper work done as the cognitive burden would be unmanageable. AI agents work the same way; some context is essential, but too much causes them to lose clarity.

Why multi-agent?

Specialization allows AI agents to excel with deeper domain knowledge and accuracy. An agent focused only on sending emails, for example, can become highly reliable at that task. Remember, sending an email requires several sources of context: company communication guidelines, product information to craft the messages, contacts to send emails to, and policies on email volume. But by concentrating exclusively on email, the agent effectively becomes the organization’s mailman.

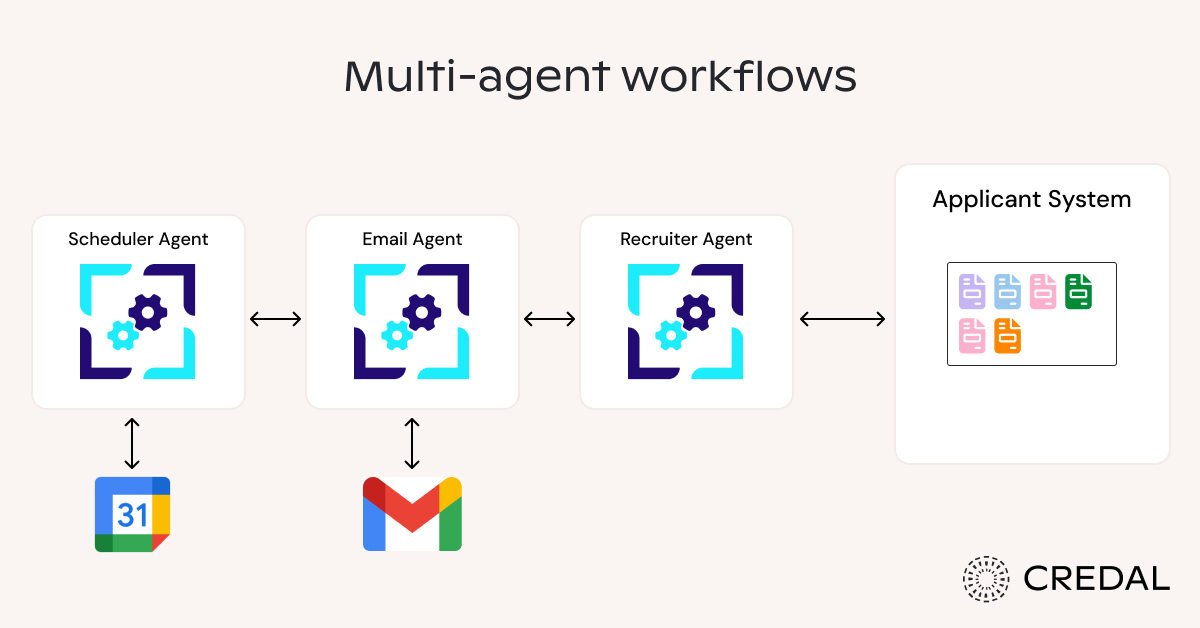

Dividing responsibilities among specialized agents is a force multiplier for AI, not a limitation. A recruiting agent can concentrate on screening candidates and coordinating follow-up interviews. This agent takes in multiple sources of context: the company’s open roles, hiring philosophy, and the hiring platform’s candidate records. When it’s time to schedule the interview, though, a recruiting agent would have to learn all the redundant context of the email agent. Or it could simply interface with the email agent. Together, they make up an effective multi-agent workflow!

At a high level, multi-agent boils down to: (i) specialization over monoliths, avoiding single “do-everything” agents that get buried in context, and (ii) cross-agent communication to get work done.
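As a minimal sketch of those two ideas, the snippet below has a recruiting agent hand email work to an email agent instead of absorbing its context. The class names, the score threshold, and the message text are all hypothetical; a real agent would sit on top of an LLM and real integrations.

```python
from dataclasses import dataclass, field


@dataclass
class EmailAgent:
    """Hypothetical specialist that owns everything about outbound email."""
    sent: list[tuple[str, str]] = field(default_factory=list)

    def send(self, to: str, body: str) -> str:
        # A real agent would apply communication guidelines and volume policies here.
        self.sent.append((to, body))
        return f"queued email to {to}"


@dataclass
class RecruitingAgent:
    """Hypothetical specialist that screens candidates and delegates email."""
    email_agent: EmailAgent
    min_score: float = 0.7  # assumed screening threshold

    def screen(self, candidates: dict[str, float]) -> list[str]:
        results = []
        for address, score in candidates.items():
            if score >= self.min_score:
                # Cross-agent call instead of re-learning the email agent's context.
                results.append(self.email_agent.send(address, "We'd like to schedule an interview."))
        return results


email_agent = EmailAgent()
recruiter = RecruitingAgent(email_agent)
outcome = recruiter.screen({"ana@example.com": 0.9, "bo@example.com": 0.4})
```

Only the candidate above the threshold triggers a call to the email agent; the recruiting agent never needs to know how email is actually sent.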

What are some examples of multi-agent workflows?

To illustrate when multi-agent workflows can be useful, let’s take a look at some examples. Keep in mind that these are all real-world use cases, which can be easily spun up in platforms like Credal thanks to its pre-built integrations.

For the sake of readability, we’ll name each of the agents and mark them in italics.

Recruiting Workflow

Imagine a recruiting workflow to evaluate candidates and do the first round of screening.

For this workflow, an Applications Evaluator Agent works with an Email Outreach Agent and a Scheduler Agent. The goal is to evaluate each application and then schedule interviews with strong candidates. By splitting the work amongst multiple agents, the evaluator can focus on evaluations alongside the email and scheduler agents, which are fed context on the company’s voice, letterhead, and calendar data.

Customer Support Triage Workflow

Imagine a customer support workflow that triages issues so that humans can better manage their time.

Here, an Intent Classifier Agent routes issues to a Knowledge Base Retrieval Agent. The Intent Classifier Agent also delegates tasks to a Remediation Agent, which reaches out to a Policy Guard Agent when it needs consultation on the business’s policies.

The system uses a publish/subscribe structure to handle ticket updates and enforces approval requirements for any destructive remediation actions, providing effective oversight of customer issue resolution.
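To make the publish/subscribe structure and the approval requirement concrete, here is a small in-process sketch. The topic name, the list of destructive actions, and the `approved_by` field are assumptions made for illustration, not part of any specific product.

```python
from collections import defaultdict
from typing import Callable

DESTRUCTIVE_ACTIONS = {"delete_account", "issue_refund"}  # assumed policy list


class TicketBus:
    """Minimal in-process publish/subscribe bus keyed by topic."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], str]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], str]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> list[str]:
        # Deliver the event to every subscriber and collect their responses.
        return [handler(event) for handler in self._subscribers[topic]]


def remediation_agent(event: dict) -> str:
    """Runs safe actions directly; holds destructive ones until a human approves."""
    action = event["action"]
    if action in DESTRUCTIVE_ACTIONS and not event.get("approved_by"):
        return f"pending_approval:{action}"
    return f"executed:{action}"


bus = TicketBus()
bus.subscribe("ticket.updated", remediation_agent)

safe = bus.publish("ticket.updated", {"action": "resend_invoice"})
blocked = bus.publish("ticket.updated", {"action": "issue_refund"})
approved = bus.publish(
    "ticket.updated", {"action": "issue_refund", "approved_by": "support-lead"}
)
```

The refund only executes once the event carries an approver, which is the oversight step the workflow depends on.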

FinOps / Revenue Operations Workflow

Imagine a revenue system that looks out for anomalies and notifies the right people.

A Report Compiler Agent interacts with both an Anomaly Detector Agent and an Account Owner Notifier to manage financial operations. This bidirectional system incorporates rate-limited outreach so as not to flood account owners and keeps detailed audit logs of all financial monitoring activities, establishing a balanced approach to financial operations management.
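One way to picture rate-limited outreach with an audit trail is a sliding-window limiter that logs every attempt, delivered or not. This is a sketch under assumed limits; the class name, the window size, and the log fields are hypothetical, and the clock is passed in explicitly so the behavior is easy to follow.

```python
from collections import defaultdict, deque


class RateLimitedNotifier:
    """Allows at most `max_per_window` notifications per owner per window."""

    def __init__(self, max_per_window: int, window_seconds: float) -> None:
        self.max_per_window = max_per_window
        self.window_seconds = window_seconds
        self._sent: dict[str, deque] = defaultdict(deque)
        self.audit_log: list[dict] = []

    def notify(self, owner: str, message: str, now: float) -> bool:
        recent = self._sent[owner]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        delivered = len(recent) < self.max_per_window
        if delivered:
            recent.append(now)
        # Every attempt is logged, including suppressed ones.
        self.audit_log.append(
            {"owner": owner, "message": message, "time": now, "delivered": delivered}
        )
        return delivered


notifier = RateLimitedNotifier(max_per_window=2, window_seconds=60)
attempts = [
    notifier.notify("owner-1", "Invoice anomaly", now=0),
    notifier.notify("owner-1", "Churn risk", now=10),
    notifier.notify("owner-1", "Usage spike", now=20),   # suppressed
    notifier.notify("owner-1", "Late payment", now=61),  # first send has expired
]
```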

So what’s the problem? Multiple agents dramatically increase the attack surface.

Earlier, we highlighted the seven tenets of AI orchestration. When multiple agents are at play, then some in particular (permissions, memory, and observability) become noticeably harder to manage.

Permissions and Memory

Permissions and memory are closely linked when it comes to AI agents, especially from a security perspective.

One tenet of safe AI agents is that they inherit the permissions of their dispatchers. So if a user has access to X, the agent should as well. Conversely, if a user cannot access Y, the agent must be restricted as well to prevent accidental data leaks.

This relies on permissions mirroring, which becomes a lot trickier to implement in multi-agent workflows. For instance:

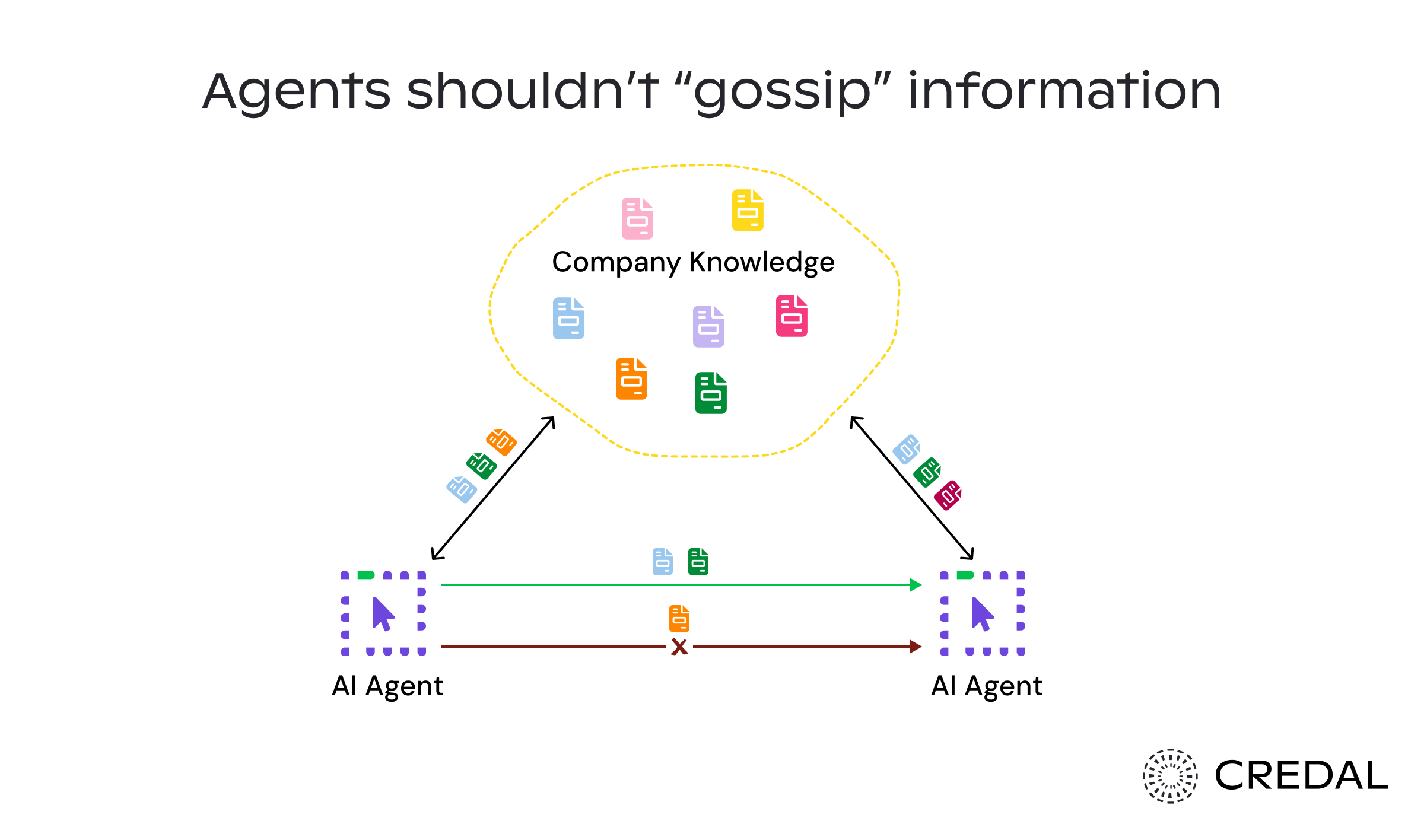

- User A prompts Agent A. User B prompts Agent B. User A and User B have different sets of permissions; if Agent A leaks sensitive data to Agent B, then User B will gain information they shouldn’t have access to!

- Agent A’s and Agent B’s respective permissions need to be cross-communicated to plan for and prevent a leak.

- Even if you only gave Agent A context restricted to what Agent B can access, Agent A might still pull from previous context in its memory that had nothing to do with its current interaction with Agent B.

The fix? Enforce strict boundaries within the AI Orchestration framework; Agent A and Agent B should never share information that they don’t both have access to. This includes preventing either agent from drawing on memory that could inadvertently expose sensitive data.
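The mutual-access rule can be sketched as a set intersection applied before anything crosses from one agent to another. The resource names and the `ContextItem` type below are hypothetical; a real system would resolve permissions from the underlying data sources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    text: str
    resource: str  # the underlying resource this item was derived from


def shareable(
    items: list[ContextItem], sender_acl: set[str], receiver_acl: set[str]
) -> list[ContextItem]:
    """Keep only items whose resource both parties are allowed to read."""
    mutual = sender_acl & receiver_acl
    return [item for item in items if item.resource in mutual]


# Agent A acts for User A and Agent B for User B; each mirrors its user's access.
agent_a_acl = {"crm", "payroll", "wiki"}
agent_b_acl = {"crm", "wiki"}

context = [
    ContextItem("Q3 pipeline summary", "crm"),
    ContextItem("Salary bands for engineering", "payroll"),
]
safe_to_send = shareable(context, agent_a_acl, agent_b_acl)
```

The payroll item is dropped because Agent B, mirroring User B, has no access to it.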

Such a framework involves four specific principles:

- Memory partitions: Memory should be segmented and tagged as per‑agent, per‑team, or shared/global, with policy‑gated access.

- Purpose‑bound queries: Agents may retrieve only what is relevant to their current task.

- Redaction/transform hooks: Data is sanitized (redacted, transformed, etc.) before sharing.

- Provenance tags: Every memory item should have a source, owner, and ACL.
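The four principles above can be combined in a small memory store. This is a sketch, assuming a flat in-memory list, a single email-redaction hook, and partition labels of our own invention; none of these names come from a specific product.

```python
import re
from dataclasses import dataclass
from typing import Callable

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact_emails(text: str) -> str:
    """Redaction hook: mask email addresses before text leaves the store."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


@dataclass(frozen=True)
class MemoryItem:
    text: str
    partition: str       # e.g. "agent:recruiter", "team:sales", "global"
    purpose: str         # task category the item may be used for
    source: str          # provenance: where it came from
    owner: str           # provenance: who owns it
    acl: frozenset[str]  # provenance: principals allowed to read it


class MemoryStore:
    def __init__(self, redact: Callable[[str], str]) -> None:
        self._items: list[MemoryItem] = []
        self._redact = redact

    def add(self, item: MemoryItem) -> None:
        self._items.append(item)

    def query(self, principal: str, partition: str, purpose: str) -> list[str]:
        # Partition, purpose, and ACL must all match; results are redacted.
        return [
            self._redact(item.text)
            for item in self._items
            if item.partition == partition
            and item.purpose == purpose
            and principal in item.acl
        ]


store = MemoryStore(redact_emails)
store.add(MemoryItem("Renewal contact is jane@corp.example", "team:sales",
                     "outreach", "salesforce", "sales-ops", frozenset({"email-agent"})))
store.add(MemoryItem("Payroll export path", "team:finance",
                     "reporting", "drive", "finance", frozenset({"finance-agent"})))

visible = store.query("email-agent", "team:sales", "outreach")
hidden = store.query("email-agent", "team:finance", "reporting")
```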

Another approach is to have a human in the loop. If an agent needs to work with an agent it hasn’t interacted with before, it must first get explicit permission from a human. This serves as an extra safety measure and doesn’t replace any of the tenets we've already discussed.
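A first-contact approval gate might look like the sketch below. The `ask_human` callback stands in for whatever approval channel an organization uses (a chat prompt, a ticket, an admin console); it and the agent names are assumptions.

```python
from typing import Callable


class FirstContactGate:
    """Requires human sign-off the first time two agents interact."""

    def __init__(self, ask_human: Callable[[str, str], bool]) -> None:
        self._ask_human = ask_human
        self._approved: set[frozenset[str]] = set()

    def allow(self, caller: str, callee: str) -> bool:
        pair = frozenset((caller, callee))
        if pair in self._approved:
            return True  # already approved, no need to ask again
        if self._ask_human(caller, callee):
            self._approved.add(pair)
            return True
        return False


prompts: list[tuple[str, str]] = []


def ask_human(caller: str, callee: str) -> bool:
    # Stand-in for a real approval step; approves only the email agent here.
    prompts.append((caller, callee))
    return callee == "email-agent"


gate = FirstContactGate(ask_human)
first = gate.allow("recruiter", "email-agent")
second = gate.allow("recruiter", "email-agent")
denied = gate.allow("recruiter", "finance-agent")
```

The human is asked once per pair of agents, so the check adds friction only on first contact.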

You’re probably thinking: does this limit AI agent performance? The short answer is yes. But with a well-designed AI orchestration solution, organizations can implement permissions mirroring in a way that preserves contextual access and inter-agent collaboration while preventing leaks. Following these rules isn’t optional, though, especially with regulations and standards like GDPR, PCI DSS v4, and CCPA imposing penalties for data mishandling.

This is why solutions like Credal were built. AI is exciting, but businesses need agentic workflows to be enterprise-ready. Credal helps keep sensitive data safe and compliant so that company resources can focus on their core product.

Observability

Observability is straightforward for a single agent: tracking its reads and writes is enough. In a multi-agent setup, however, observability must also capture and visualize the paths between agents.

This is similar to request tracing in traditional systems. Modern observability tooling uses distributed traces to follow each request and the downstream requests it generates throughout the system. We need the same visibility in AI agents: monitoring who called whom, with what context, and what tools or actions were invoked.

For these paths to actually be useful, they must include trace IDs, spans per agent/action, decision logs (including permission checks), data access logs, and cost/latency metrics.
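A stripped-down tracer shows how those fields fit together. The `Tracer` and `Span` classes here are hypothetical and kept in memory; a production setup would more likely export spans to an existing tracing backend.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    agent: str
    action: str
    decisions: list[str] = field(default_factory=list)    # e.g. permission checks
    data_access: list[str] = field(default_factory=list)  # resources read or written
    tokens: int = 0
    latency_ms: float = 0.0


class Tracer:
    """One trace per session; spans nest to record who called whom."""

    def __init__(self) -> None:
        self.trace_id = uuid.uuid4().hex
        self.spans: list[Span] = []
        self._stack: list[Span] = []

    @contextmanager
    def span(self, agent: str, action: str):
        parent = self._stack[-1].span_id if self._stack else None
        current = Span(self.trace_id, uuid.uuid4().hex[:8], parent, agent, action)
        self.spans.append(current)
        self._stack.append(current)
        start = time.perf_counter()
        try:
            yield current
        finally:
            current.latency_ms = (time.perf_counter() - start) * 1000
            self._stack.pop()


tracer = Tracer()
with tracer.span("intent-classifier", "classify_ticket") as root:
    root.decisions.append("permission check: ticket read allowed")
    with tracer.span("remediation", "lookup_refund") as child:
        child.data_access.append("billing:read")
        child.tokens = 120
```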

Observability data should be accessible through two primary views: (i) a trace-focused view for debugging individual sessions and (ii) a topological view that shows agent interactions, highlights hot paths, and identifies bottlenecks.
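The topological view can be derived from the same call records by aggregating them into edges. In this sketch the call list is made-up sample data, "hot path" is taken to mean the most frequently used edge, and "bottleneck" the edge with the highest average latency; both definitions are assumptions.

```python
from collections import Counter, defaultdict

# (caller, callee, latency in ms): illustrative sample data only
calls = [
    ("intent-classifier", "kb-retrieval", 40.0),
    ("intent-classifier", "remediation", 300.0),
    ("remediation", "policy-guard", 90.0),
    ("intent-classifier", "kb-retrieval", 60.0),
]


def build_topology(records):
    """Aggregate individual calls into per-edge counts and average latency."""
    counts: Counter = Counter()
    total_latency: dict = defaultdict(float)
    for caller, callee, latency_ms in records:
        edge = (caller, callee)
        counts[edge] += 1
        total_latency[edge] += latency_ms
    avg_latency = {edge: total_latency[edge] / counts[edge] for edge in counts}
    return counts, avg_latency


edge_counts, avg_latency = build_topology(calls)
hot_path = edge_counts.most_common(1)[0][0]
bottleneck = max(avg_latency, key=avg_latency.get)
```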

Why do we care?

- Compliance: Observability helps us stay compliant with broad security tenets of SOC 2 and PCI DSS by letting us trace any sensitive information that might bubble through a system.

- Security: If something goes wrong, we can trace the exact sequence of events.

- Debugging: Detailed traces allow developers to pinpoint exactly where the process failed.

These are key reasons why Credal implemented important observability features, such as the aforementioned access tracking.

Challenge: Agents need to be able to communicate

Observability, memory, and permissions are challenges to both single agents and multi-agent systems. Coordinating agents to collaborate, however, is a challenge you only see in multi-agent workflows.

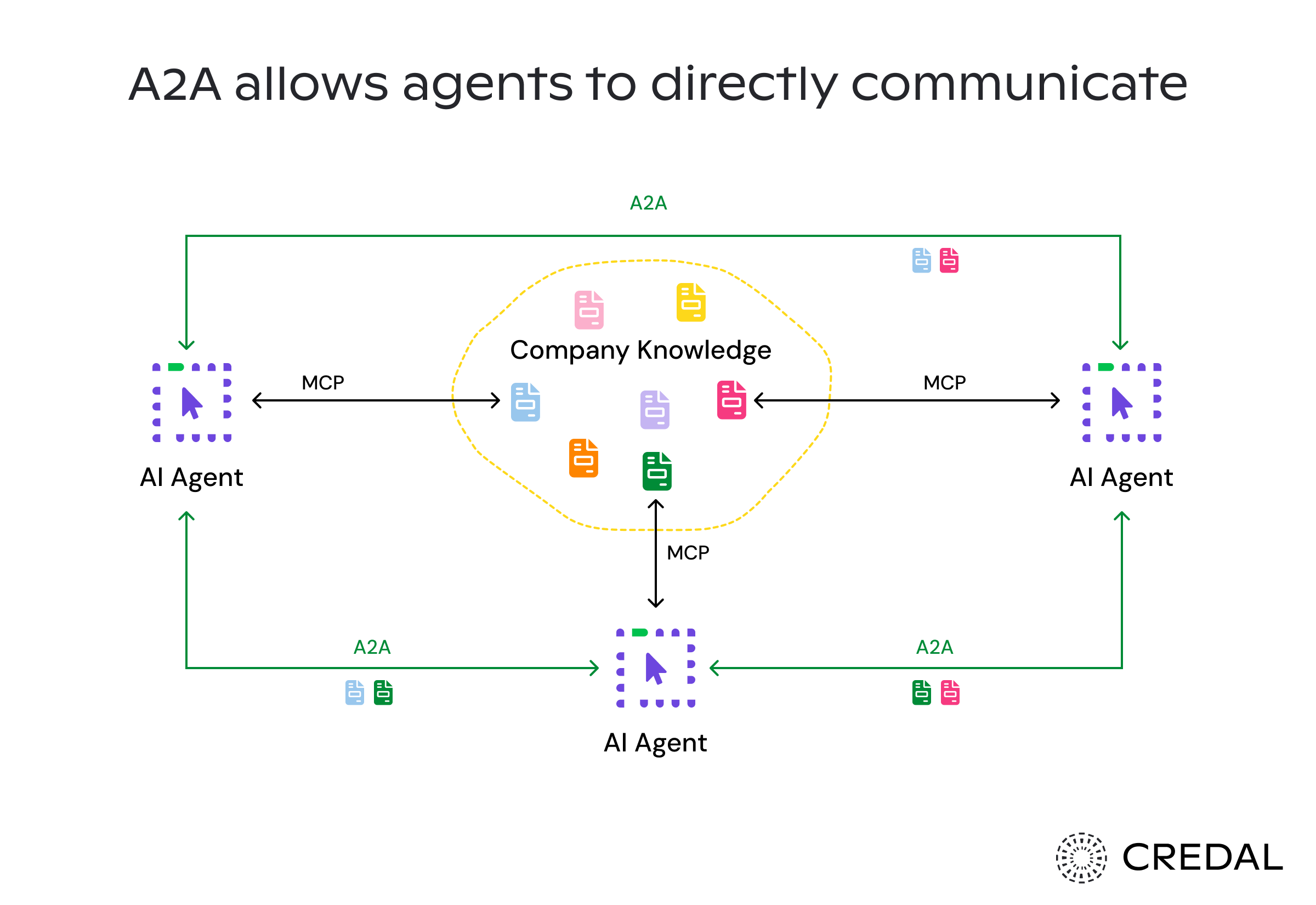

A2A

A2A (Agent-to-Agent) is a protocol developed by Google for effective communication between AI agents in multi-agent systems. A2A aims to standardize agent interactions with structured message formats that convey task specifications, context pointers, and correlation IDs.

When agents communicate, you get the usual network headaches: retries, timeouts, and the need for idempotency (a guarantee that repeating a request doesn’t run the same action multiple times). A2A addresses these issues with a shared protocol between agents that handles communication failures gracefully.

Thus, it’s best for a multi-agent orchestration platform to support A2A communication, or a similar framework, so that inter-agent communication can happen without interruption.
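To illustrate why correlation IDs and idempotency matter, here is a generic sketch of a retried message. It is not the A2A wire format: the `TaskMessage` fields, the `Receiver` class, and the flaky transport are all hypothetical and only model the failure mode where a response is lost after the work has already run.

```python
import uuid
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TaskMessage:
    task: str
    context_refs: tuple[str, ...]  # pointers to context, not the context itself
    correlation_id: str            # ties the message to a wider conversation
    idempotency_key: str = field(default_factory=lambda: uuid.uuid4().hex)


class Receiver:
    def __init__(self) -> None:
        self._results: dict[str, str] = {}
        self.executions = 0

    def handle(self, msg: TaskMessage) -> str:
        # A repeated key returns the stored result instead of re-running the task.
        if msg.idempotency_key in self._results:
            return self._results[msg.idempotency_key]
        self.executions += 1
        result = f"done:{msg.task}"
        self._results[msg.idempotency_key] = result
        return result


def make_flaky_transport():
    """Transport whose first response is lost after the receiver has done the work."""
    state = {"calls": 0}

    def transport(receiver: Receiver, msg: TaskMessage) -> str:
        state["calls"] += 1
        result = receiver.handle(msg)
        if state["calls"] == 1:
            raise TimeoutError("response lost")
        return result

    return transport


def send_with_retry(receiver, msg, transport, max_attempts: int = 3) -> str:
    for attempt in range(1, max_attempts + 1):
        try:
            return transport(receiver, msg)
        except TimeoutError:
            if attempt == max_attempts:
                raise


receiver = Receiver()
message = TaskMessage("send_email", ("doc:123",), correlation_id="corr-1")
result = send_with_retry(receiver, message, make_flaky_transport())
```

The sender retries after the timeout, but because the key is unchanged the email task runs only once.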

Discovery

Another challenge is actually establishing agent-to-agent connections. Manually connecting agents is slow and requires humans to do a significant portion of work—essentially turning multi-agent workflows into fancy Zapier chains. That works for simple, predictable tasks, but agents need to be able to find information and take action themselves. This is crucial for deeper research tasks, like checking if a sales email follows compliance rules and company policies, when relevant data is scattered across different locations.

An effective multi-agent orchestration solution should allow agents to interact and collaborate autonomously. This means maintaining an agent registry, where each agent has a profile detailing its purpose and capabilities.
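A registry of this kind can be sketched as a lookup from capability to agent profile. The profile fields and capability strings are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentProfile:
    name: str
    description: str
    capabilities: frozenset[str]


class AgentRegistry:
    def __init__(self) -> None:
        self._profiles: dict[str, AgentProfile] = {}

    def register(self, profile: AgentProfile) -> None:
        self._profiles[profile.name] = profile

    def find(self, capability: str) -> list[str]:
        """Names of agents advertising the capability, sorted for stable output."""
        return sorted(
            profile.name
            for profile in self._profiles.values()
            if capability in profile.capabilities
        )


registry = AgentRegistry()
registry.register(AgentProfile(
    "email-outreach", "Sends policy-compliant email", frozenset({"send_email"})))
registry.register(AgentProfile(
    "scheduler", "Books meetings", frozenset({"schedule_meeting", "send_email"})))
registry.register(AgentProfile(
    "policy-guard", "Answers policy questions", frozenset({"check_policy"})))

email_capable = registry.find("send_email")
```

An agent that needs a capability queries the registry rather than relying on a human to wire the connection by hand.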

Orchestrator Agent

Certain multi-agent workflows benefit from having an orchestration agent responsible for connecting agents and delegating tasks. This orchestrator agent acts like a human manager, streamlining discoverability and reducing redundancy by serving as a single source of truth. This approach is often called the hub-and-spoke model.

At its core, an orchestrator agent is just another agent—it communicates via A2A, stores memory for context, and runs on an LLM. The orchestration platform doesn’t have much to do in this case, but a good platform makes it easy for developers to find and inspect the orchestrator’s data, which is usually the most important information.
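The hub-and-spoke shape can be shown with a toy orchestrator that routes each step of a plan to a registered agent and records the delegation. The plan is hard-coded here as an assumption; a real orchestrator agent would typically produce it with an LLM, and the spokes would be full agents rather than lambdas.

```python
from typing import Callable


class Orchestrator:
    """Hub in a hub-and-spoke layout: spokes register, the hub delegates."""

    def __init__(self) -> None:
        self._spokes: dict[str, Callable[[str], str]] = {}
        self.delegation_log: list[tuple[str, str]] = []

    def register(self, capability: str, agent: Callable[[str], str]) -> None:
        self._spokes[capability] = agent

    def run(self, plan: list[tuple[str, str]]) -> list[str]:
        results = []
        for capability, payload in plan:
            agent = self._spokes.get(capability)
            if agent is None:
                raise LookupError(f"no agent registered for {capability!r}")
            # The log is what developers would inspect to see what was delegated.
            self.delegation_log.append((capability, payload))
            results.append(agent(payload))
        return results


hub = Orchestrator()
hub.register("evaluate", lambda application: f"score for {application}: strong")
hub.register("schedule", lambda candidate: f"interview booked with {candidate}")

results = hub.run([("evaluate", "application-17"), ("schedule", "candidate-17")])
```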

Challenge: Cost and Performance Controls

As multi-agent systems scale, so do expenses. Agents rely on LLMs, which incur token-based charges, and complex multi-agent workflows might make non-trivial API requests with high data throughput.

To reduce the risk of an outage, an AI orchestration platform should automatically flag any time a threshold is crossed so that a human can step in and assess the situation.
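A threshold alert of this kind could be sketched as follows. The budget, the per-token prices, and the `on_breach` callback are illustrative assumptions, not real pricing or a real alerting API.

```python
from collections import defaultdict
from typing import Callable


class BudgetMonitor:
    """Tracks spend per agent and flags once when the total budget is exceeded."""

    def __init__(self, limit_usd: float, on_breach: Callable[[float], None]) -> None:
        self.limit_usd = limit_usd
        self.on_breach = on_breach
        self.spend_by_agent: dict[str, float] = defaultdict(float)
        self._flagged = False

    @property
    def total(self) -> float:
        return sum(self.spend_by_agent.values())

    def record(self, agent: str, tokens: int, usd_per_1k_tokens: float) -> None:
        self.spend_by_agent[agent] += tokens / 1000 * usd_per_1k_tokens
        # Flag only the first breach so a human is alerted without being spammed.
        if self.total > self.limit_usd and not self._flagged:
            self._flagged = True
            self.on_breach(self.total)


alerts: list[float] = []
monitor = BudgetMonitor(limit_usd=1.0, on_breach=alerts.append)
monitor.record("report-compiler", tokens=1000, usd_per_1k_tokens=0.5)
monitor.record("anomaly-detector", tokens=2000, usd_per_1k_tokens=0.5)
monitor.record("anomaly-detector", tokens=1000, usd_per_1k_tokens=0.5)
```

Keeping spend broken down by agent also makes it easier to see which part of a workflow is driving cost.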

How Credal is approaching this

Credal is an AI orchestration platform designed specifically for scalable AI operations. Multi-agent orchestration is central to Credal’s mission and product. Using Credal, you can launch managed agents with ready access to tools, including pre-built integrations to third-party data sources (e.g. Snowflake, Dropbox, or Google Drive). Credal also supports multiple agents, making it easy for agents to discover one another and work together.

Multi-agent workflows are inherently complex and require careful management. If you are interested in discovering how Credal works, please sign up for a demo today!